We’ve heard enough about Large Language Models (LLMs) in recent years. Since 2024, we have increasingly heard about AI “agents” or “agentic systems”, and some predict that 2025 will be the year of the AI agent. In addition, LLM-based multi-agent systems (MAS) have gained significant attention in both research and industry, with many proposed frameworks such as MetaGPT and AutoGen from Microsoft.

However, the relationships between these concepts remained unclear for a while, at least for me. In this post, let’s demystify them.

LLMs

LLMs are impressive because they can be used directly for a wide range of tasks, such as question answering, sentiment classification, or summarization, simply by giving a different instruction or input prompt for each task.

This capability can be attributed to the scaling laws, where an extremely large model (i.e., with a huge number of parameters) is trained on vast amounts of data using significant computing resources. This is impressive because, before LLMs, we typically needed a specialized model for each task.

Despite their usefulness, LLMs have several limitations. For discussion, we can simply think of an LLM as a function that takes a textual input (input prompt) and returns a textual output (output prompt) after extensive matrix manipulations.

def llm(input_prompt):
    # Matrix manipulations (the model's forward pass) would happen here
    output_prompt = ...
    return output_prompt

In addition to the well-known hallucination issue, the main limitations of LLMs are context length, knowledge cut-off, and their stateless nature.

- Context length refers to the maximum length of an input prompt, which is limited. Normally, an LLM's context length can be found in its model card, such as the one in Table 1, or on its information page. Although recent advanced models have been able to increase the context length, it is certainly not unlimited. Additionally, having a large context does not automatically translate to improved performance, as it also introduces challenges, such as retrieving relevant information from a lengthy prompt (like finding a needle in a haystack) and dealing with recency bias.

- Knowledge cut-off refers to the fact that an LLM’s knowledge is based on the massive dataset used for training, which only covers data up to a specific date. As a result, an LLM cannot provide accurate information about events that have occurred after its cut-off date. For example, if we ask an LLM about an event that happened today, it will not have the correct answer.

- LLMs are stateless. As mentioned earlier, an LLM simply takes the current input and generates an output prompt. This means that, on its own, an LLM cannot handle multi-turn conversations, as it does not retain awareness of previous exchanges or context.

User: My name is Guangyuan Piao

LLM: Nice to meet you

User: What is my name?

LLM: Sorry…
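To make the stateless behaviour concrete, here is a minimal sketch. `toy_llm` is a hypothetical stand-in for a real model (it only pattern-matches on the prompt it receives), so only the calling pattern matters: the second call fails unless the earlier turn is sent again as part of the prompt.

```python
import re


def toy_llm(input_prompt: str) -> str:
    """Hypothetical stand-in for an LLM: it sees only `input_prompt`, nothing else."""
    asks_for_name = "what is my name" in input_prompt.lower()
    match = re.search(r"my name is ([A-Za-z ]+)", input_prompt, flags=re.IGNORECASE)
    if asks_for_name:
        # The question can be answered only if the name appears in *this* prompt.
        if match:
            return f"Your name is {match.group(1).strip()}."
        return "Sorry, I don't know your name."
    return "Nice to meet you."


# Two independent calls: the second one knows nothing about the first.
first_turn = "My name is Guangyuan Piao"
print(toy_llm(first_turn))
print(toy_llm("What is my name?"))

# Re-sending the earlier turns inside the prompt is what makes multi-turn chat work.
history = f"User: {first_turn}\nLLM: Nice to meet you.\nUser: What is my name?"
print(toy_llm(history))
```

The last call succeeds only because the caller, not the model, carried the conversation forward.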

With the limitations of LLMs in mind, we can expect that they need additional tools and capabilities to overcome these challenges.

You are right, this is where agents come into play!

We’ve just explored one of the two perspectives on agents: the LLM-first view, which sees agents as wrappers around LLMs, enhanced with extra tools and capabilities. In the following section, we will start with the other perspective: the agent-first view.

Agents

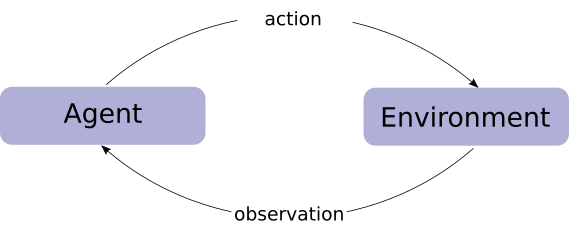

Agents have existed for a long time, well before LLMs. They interact with a virtual or physical environment through actions and observations.
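The action-observation loop can be sketched without any LLM at all. The thermostat below is a hypothetical, classic-style agent: it observes a temperature, picks an action by a fixed rule, and the simulated environment changes in response. All names and numbers are illustrative assumptions.

```python
class Room:
    """Simulated environment: a room whose temperature reacts to the heater."""

    def __init__(self, temperature: float) -> None:
        self.temperature = temperature

    def observe(self) -> float:
        return self.temperature

    def step(self, action: str) -> None:
        # Heating warms the room by 1 degree; otherwise it cools by 0.5 degrees.
        self.temperature += 1.0 if action == "heat" else -0.5


class ThermostatAgent:
    """Rule-based agent: maps each observation to an action."""

    def __init__(self, target: float) -> None:
        self.target = target

    def act(self, observation: float) -> str:
        return "heat" if observation < self.target else "idle"


def run_episode(agent: ThermostatAgent, env: Room, steps: int) -> list[tuple[float, str]]:
    """Run the observe -> act -> environment-update loop and log each step."""
    log = []
    for _ in range(steps):
        observation = env.observe()
        action = agent.act(observation)
        env.step(action)
        log.append((observation, action))
    return log


for observation, action in run_episode(ThermostatAgent(target=20.0), Room(18.0), steps=4):
    print(f"observed {observation:.1f} -> {action}")
```

An LLM-based agent keeps this same loop; what changes is that the decision rule inside `act` is replaced by a model.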

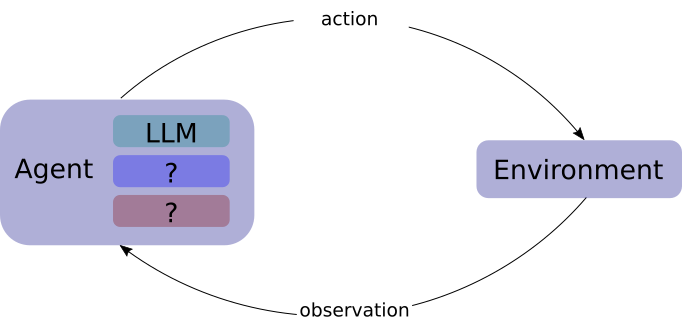

Nowadays, “agent” is an overloaded term. When we talk about agents, we often mean LLM-based agents, sometimes referred to as modern agents. This is the second perspective, from the historical agent point of view: an agent equipped with LLM capabilities such as reasoning.

We’ve just looked at two perspectives of (LLM-based) agents:

- Agent as a wrapper for LLM

- Agent equipped with LLM capabilities

So, what are these added components (question marks in Fig. 2) for agents?

HuggingFace provides a good analogy: an agent has an LLM as its brain, and capabilities and tools as its body.

- Tools available to an agent allow it to access new knowledge beyond the knowledge cut-off date, such as retrieving social media data or the current weather.

- Capabilities represent everything the agent is equipped to do. One popular capability example is using a memory component to remember things, such as previous conversations.

The scope of possible actions depends on what the agent has been equipped with. For example, because humans lack wings, they can’t perform the “fly” Action, but they can execute Actions like “walk”, “run”, “jump”, “grab”, and so on.

— HuggingFace Agents Course

As we can see, capabilities such as using memory help overcome the stateless nature of LLMs, while exploiting tools helps mitigate the knowledge cut-off limitation. These can also help keep the current context short, for example by retrieving only the necessary context.
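A minimal sketch of this idea follows, assuming a hypothetical `toy_llm` stand-in and a hand-written routing rule (a real agent would let the LLM decide which tool to call). The agent wraps the model, keeps past turns in a memory list, and calls a tool to fetch information the model could not know from training.

```python
from datetime import date
from typing import Callable


def toy_llm(input_prompt: str) -> str:
    """Hypothetical stand-in for an LLM call; it only reports how much context it got."""
    return f"(answer based on {len(input_prompt.splitlines())} line(s) of context)"


def today_tool() -> str:
    """Tool: fetches information that lies beyond any training cut-off."""
    return f"Today's date is {date.today().isoformat()}."


class Agent:
    """Wraps an LLM with a memory (capability) and named tools."""

    def __init__(self, llm: Callable[[str], str], tools: dict[str, Callable[[], str]]) -> None:
        self.llm = llm
        self.tools = tools
        self.memory: list[str] = []  # previous turns, re-sent on every call

    def run(self, user_message: str) -> str:
        context = list(self.memory)
        # Illustrative routing rule; real agents let the LLM choose the tool.
        if "today" in user_message.lower() and "today" in self.tools:
            context.append(f"Tool result: {self.tools['today']()}")
        context.append(f"User: {user_message}")
        reply = self.llm("\n".join(context))
        self.memory += [f"User: {user_message}", f"Agent: {reply}"]
        return reply


agent = Agent(toy_llm, tools={"today": today_tool})
print(agent.run("My name is Guangyuan Piao"))
print(agent.run("What is the date today?"))
```

The second call reaches the model with the earlier turn and the tool result already in the prompt, which is how the wrapper compensates for both statelessness and the knowledge cut-off.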

In this sense, many applications we use nowadays, such as Perplexity or ChatGPT, are chat agents.

In my view, since I like to simplify things, capabilities can also be treated as the tools that implement them. For example, a physical agent has tools for interacting with hardware, and a chat agent has tools for managing memories. What are your thoughts?

Multi-agent Systems (MAS)

Agents seem to enhance LLMs significantly with tools and capabilities. However, the brain (LLM) of an agent unfortunately needs to perform many additional tasks, such as determining which tools to use, how to use them, and how to manage memories. For simple tasks, a single agent can indeed get the job done.

However, as the task becomes more and more complex, a single agent no longer works well, just as a single person cannot handle a complex project on their own.

We need more people, sometimes each with specialized expertise in a different task!

This is where multi-agent systems come into play! Many studies have also found that dividing a task into subtasks and working with more agents, where each tackles a simple subtask, works better for solving complex problems.

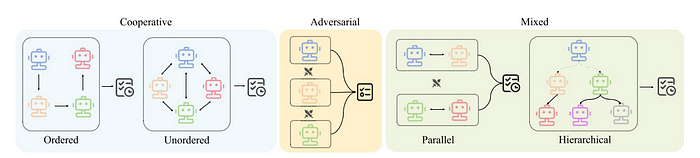

However, simply adding more agents is not as easy as it may seem. To make multi-agent systems work, several challenges must be addressed.

- How do we manage agents? For example, do we use a set of predefined agents, or do we create them on the fly based on our needs? Can these agents be allowed to evolve — changing their behaviors or personalities (e.g., by modifying their system prompts) on their own?

- How do we enable these agents to communicate with each other? For example, as shown in Fig. 3, do the agents communicate in a cooperative manner? Can they communicate with each other in a peer-to-peer fashion or a hierarchical fashion (e.g., agents can only talk to an upper-level manager agent)?
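One way to picture the hierarchical option is the sketch below. It assumes a fixed, predefined set of agents and a hypothetical manager that splits a task using a hard-coded plan; in a real system each agent would wrap an LLM and the manager would produce the plan itself.

```python
from dataclasses import dataclass, field


@dataclass
class WorkerAgent:
    """Specialized agent; `handle` stands in for an LLM call with a role-specific system prompt."""
    role: str

    def handle(self, subtask: str) -> str:
        return f"[{self.role}] done: {subtask}"


@dataclass
class ManagerAgent:
    """Upper-level agent: workers talk only to the manager, never to each other."""
    workers: dict[str, WorkerAgent]
    transcript: list[str] = field(default_factory=list)

    def plan(self, task: str) -> list[tuple[str, str]]:
        # Hard-coded decomposition, for illustration only.
        return [
            ("researcher", f"collect sources for '{task}'"),
            ("writer", f"draft a report on '{task}'"),
            ("reviewer", f"check the draft on '{task}'"),
        ]

    def run(self, task: str) -> list[str]:
        results = []
        for role, subtask in self.plan(task):
            result = self.workers[role].handle(subtask)
            # Every message passes through the manager and is recorded.
            self.transcript.append(f"manager -> {role}: {subtask}")
            self.transcript.append(f"{role} -> manager: {result}")
            results.append(result)
        return results


manager = ManagerAgent({r: WorkerAgent(r) for r in ("researcher", "writer", "reviewer")})
for line in manager.run("LLM agents"):
    print(line)
```

A peer-to-peer design would instead let workers call each other's `handle` directly, which removes the bottleneck at the manager but makes the conversation harder to control.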

Hopefully, you enjoyed the article and it helped clarify the relationships between LLMs, agents, and multi-agent systems. As you might expect, there are many research challenges and opportunities in each of these areas (LLMs themselves, agents, and MAS), and we expect there will be many more advances as the field is moving at such a fast pace.

Discussion

Many tech and research giants have also emphasized that agents are going to play a critical role from this year.

Agents are not only going to change how everyone interacts with computers. They’re also going to upend the software industry, bringing about the biggest revolution in computing since we went from typing commands to tapping on icons. — Bill Gates

I think AI agentic workflows will drive massive AI progress this year. — Andrew Ng

We believe that, in 2025, we may see the first AI agents “join the workforce” and materially change the output of companies. — Sam Altman

The signals are already coming in. At the time of writing this article, there is news that OpenAI reportedly plans to charge up to $20,000 a month for specialized AI ‘agents’. Also, Salesforce's CEO mentioned earlier this year that the company does not plan to hire engineers this year because of the success of AI agents created and used by the company. Similarly, Meta CEO Mark Zuckerberg said that AI will replace mid-level engineers by 2025. It is clear that there will be many in-house agents in each company in the near future.

Interestingly, at least to me, we are also going to see “a population of agents” on the Web that can be reused. For example, HuggingFace allows you to share your agent and make it reusable by other people, just like open-source models on the hub. Those agents will be specialized tools to enhance the capabilities of the agents we are building.

Below is a code snippet for pushing a developed agent to the hub and then running the agent from the hub.

# `agent` is an agent you have already built
# Change to your username and repo name
agent.push_to_hub('sergiopaniego/AlfredAgent')

# Change to your username and repo name
alfred_agent = agent.from_hub('sergiopaniego/AlfredAgent', trust_remote_code=True)

alfred_agent.run("Give me the best playlist for a party at Wayne's mansion. The party idea is a 'villain masquerade' theme")

This year definitely marks the start of seeing what agents can offer our world.

Are you a decision maker responsible for incorporating those agents into your company’s workflows? Are you a developer actively developing agents? Are you a researcher actively working on some of the challenges?

What are your thoughts on agents, and what has your experience been with implementing agents or agentic workflows?
