In a previous post, we discussed some limitations of LLMs and the relationship between LLMs and LLM-based agents.

One key enhancement that agents bring to LLMs is memory, which helps overcome the context length limitation of LLMs. But how is memory actually implemented in agents? That is the focus of this post.

First, we will explore a conceptual architecture framework named Cognitive Architectures for Language Agents (CoALA). Then, we will examine a few examples of memory implementations in recent studies, such as MemGPT and Zep.

CoALA, 2023

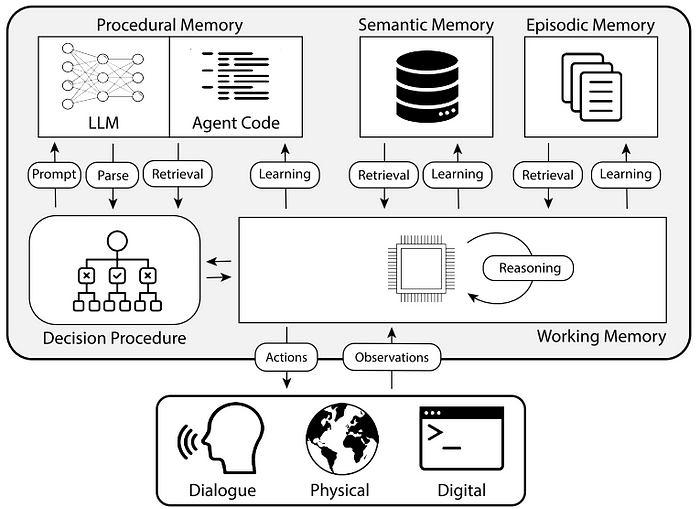

Cognitive Architectures for Language Agents (CoALA) [3] provides a clear conceptual framework for LLM-based agents, featuring two main types of memory as shown in Figure 1:

- working memory

- long term memory

We can simply ignore other details in Figure 1, as they are not relevant to our discussion in this post.

Working memory stores active and readily available information for the current decision cycle. This includes perceptual inputs, active knowledge (generated by reasoning or retrieved from long-term memory), and other core information carried over from the previous decision cycle (e.g., agent’s active goals).

Long term memory is divided into three distinct types:

- procedural memory consists of implicit knowledge stored in the LLM weights, and explicit knowledge written in the agent’s code. The agent’s code can be further divided into two types: procedures that implement actions (e.g., reasoning, retrieval, grounding, and learning procedures), and procedures that implement decision-making itself.

- semantic memory stores an agent’s knowledge about the world and itself. Retrieval-Augmented Generation (RAG) can be seen as retrieving from a semantic memory of unstructured text, such as Wikipedia.

- episodic memory stores experiences from earlier decision cycles.
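
To make the split concrete, here is a minimal sketch of the two memory types as plain data structures. It is not taken from the CoALA paper: the class names, the field names, and the `retrieve` and `end_cycle` helpers are all hypothetical, and retrieval is reduced to a naive substring match.

```python
from dataclasses import dataclass, field


@dataclass
class WorkingMemory:
    """Information readily available to the current decision cycle."""
    observations: list[str] = field(default_factory=list)      # perceptual inputs
    active_knowledge: list[str] = field(default_factory=list)  # reasoned or retrieved
    goals: list[str] = field(default_factory=list)             # carried across cycles


@dataclass
class LongTermMemory:
    """The three long-term stores (procedural memory reduced to named entries)."""
    procedural: dict[str, object] = field(default_factory=dict)
    semantic: list[str] = field(default_factory=list)   # knowledge about the world and the agent
    episodic: list[str] = field(default_factory=list)   # experiences from earlier cycles


def retrieve(ltm: LongTermMemory, wm: WorkingMemory, query: str) -> None:
    """Copy matching long-term entries into working memory (assumed: substring match)."""
    needle = query.lower()
    for entry in ltm.semantic + ltm.episodic:
        if needle in entry.lower():
            wm.active_knowledge.append(entry)


def end_cycle(ltm: LongTermMemory, wm: WorkingMemory) -> None:
    """Learning step: move this cycle's observations into episodic memory."""
    ltm.episodic.extend(wm.observations)
    wm.observations.clear()
```

The point of the sketch is the direction of data flow: retrieval reads from long-term memory into working memory, and learning writes in the opposite direction at the end of a cycle.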

With this conceptual framework in mind, let's explore how some aspects of memory have been implemented in recent works. In essence, these approaches aim to retain essential information in the working memory, while storing the rest in the long term memory, with a mechanism to facilitate the information exchange between the two.

MemGPT, 2023

MemGPT [1] proposes an OS-inspired, multi-level memory architecture with two main types of memory:

- Main context (analogous to main memory/physical memory or RAM) refers to the context within the finite context window of an LLM and can be accessed by the LLM during inference (top part of Figure 1)

- External context (analogous to disk memory/disk storage) refers to any information that is held outside the fixed context window of the LLM. It consists of archival storage (the MemGPT read/write database storing arbitrary-length text objects) and recall storage (the MemGPT message database) in Figure 1.

In the context of CoALA, the main context can be regarded as working memory, while the external context functions as long term memory.

Key idea:

A FIFO queue in the main context maintains a rolling history of messages. Its first entry is a system message that contains a recursive summary of the messages already removed from the queue.

The queue manager plays a crucial role in managing the main context:

- Managing messages in recall storage and the FIFO queue: It writes incoming messages and the generated LLM outputs to recall storage. Messages in recall storage can be retrieved via function calls (handled by the Function Executor in Figure 1) and attached to the main context.

- When the prompt tokens exceed the “warning token count” (e.g., 70% of the LLM’s context window), the queue manager inserts a warning, which lets the LLM use function calls to move important information from the FIFO queue to working context or archival storage.

- When the prompt tokens exceed the “flush token count” (e.g., 100% of the context window), the queue manager flushes the queue to free up space in the context window and generates a new recursive summary from the existing recursive summary and the evicted messages. The evicted messages remain in recall storage indefinitely and can be read via MemGPT function calls.
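
The queue manager's two thresholds can be sketched in a few lines. This is an illustration under stated assumptions, not MemGPT's actual code: `QueueManager`, `count_tokens`, `summarize`, and `WARNING` are hypothetical names, tokens are counted as whitespace-separated words, the summarizer is a truncating stand-in for an LLM call, and flushing down to half of the window is an assumed target.

```python
from dataclasses import dataclass, field

WARNING = "[system] memory pressure warning"


def count_tokens(text: str) -> int:
    """Stand-in tokenizer (assumption): one token per whitespace-separated word."""
    return len(text.split())


def summarize(previous: str, evicted: list[str], max_words: int) -> str:
    """Stand-in for the LLM summarization call: keep only the most recent words."""
    words = " ".join([previous, *evicted]).split()
    return " ".join(words[-max_words:])


@dataclass
class QueueManager:
    context_window: int
    warning_fraction: float = 0.7   # "warning token count" as a share of the window
    flush_fraction: float = 1.0     # "flush token count" as a share of the window
    summary_words: int = 8          # cap on the recursive summary (assumption)
    summary: str = ""               # recursive summary held at the head of the queue
    fifo: list[str] = field(default_factory=list)
    recall_storage: list[str] = field(default_factory=list)
    warned: bool = False

    def prompt_tokens(self) -> int:
        return count_tokens(self.summary) + sum(count_tokens(m) for m in self.fifo)

    def append(self, message: str) -> None:
        # Every message is written to recall storage as well as to the queue.
        self.recall_storage.append(message)
        self.fifo.append(message)
        used = self.prompt_tokens()
        if used > self.flush_fraction * self.context_window:
            self.flush()
        elif used > self.warning_fraction * self.context_window and not self.warned:
            self.warned = True
            self.fifo.append(WARNING)

    def flush(self) -> None:
        # Evict the oldest messages until the queue fits the assumed post-flush budget.
        budget = self.context_window // 2 - self.summary_words
        evicted = []
        while self.fifo and sum(count_tokens(m) for m in self.fifo) > budget:
            evicted.append(self.fifo.pop(0))
        # Fold the evicted messages into the recursive summary.
        self.summary = summarize(self.summary, evicted, self.summary_words)
        self.warned = False
```

Because every message is written to `recall_storage` on arrival, eviction loses nothing: flushed messages leave the prompt but stay retrievable, which is the property the last bullet describes.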

Zep, 2025

Zep [2] addresses the memory problem by introducing a temporal knowledge graph (KG) as an agent’s memory, which can be considered a form of long term memory.

Key idea:

The constructed KG consists of three types of nodes:

- episode nodes contain raw input data (messages, text, or JSON); episode edges connect episodes to the semantic entities they refer to

- semantic entity nodes represent entities extracted from episodes and resolved to existing graph entities; semantic edges between entity nodes capture relationships between entities extracted from episodes

- community nodes represent groups of strongly connected entities (identified via a community detection approach); community edges link communities to their respective entity members.

Temporal aspects of the KG

- KG construction begins by creating episode nodes, using a bi-temporal model. One timeline represents the chronological ordering of events, while the other tracks the transactional order of Zep’s data ingestion.

- This approach allows for dynamic information updates through temporal extraction and edge invalidation. More specifically, the system tracks four timestamps: t′_created and t′_expired (on the transactional timeline T′) record when facts are created or invalidated in the system, while t_valid and t_invalid (on the event timeline T) bound the period during which facts hold. These timestamps are stored on edges alongside other facts.
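
A small sketch of an edge that carries the four timestamps may help. It is illustrative only: `FactEdge`, `invalidate`, and `facts_valid_at` are hypothetical names, the contradicting pair of facts is taken as given rather than detected, and closing the old fact at the moment the new one becomes valid is an assumption of this sketch.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass
class FactEdge:
    source: str
    relation: str
    target: str
    valid_at: datetime                     # timeline T: when the fact starts to hold
    created_at: datetime                   # timeline T': when the system ingested it
    invalid_at: Optional[datetime] = None  # timeline T: when the fact stops holding
    expired_at: Optional[datetime] = None  # timeline T': when the system retired it


def invalidate(old: FactEdge, new: FactEdge) -> None:
    """Mark `old` as superseded by `new` without deleting it."""
    old.invalid_at = new.valid_at     # the old fact ends when the new one begins
    old.expired_at = new.created_at   # the system learned this at ingestion time


def facts_valid_at(edges: list[FactEdge], when: datetime) -> list[FactEdge]:
    """Return the facts that hold at `when` on the event timeline T."""
    return [
        e for e in edges
        if e.valid_at <= when and (e.invalid_at is None or when < e.invalid_at)
    ]


acme = FactEdge("Alice", "works_at", "Acme",
                valid_at=datetime(2020, 1, 1), created_at=datetime(2024, 5, 1))
globex = FactEdge("Alice", "works_at", "Globex",
                  valid_at=datetime(2023, 6, 1), created_at=datetime(2024, 5, 2))
invalidate(acme, globex)
```

Keeping the superseded edge instead of deleting it is what allows questions about the past: querying `facts_valid_at` for a date in 2022 still returns the Acme edge, while a date in 2024 returns only the Globex edge.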

Memory retrieval from the KG takes three steps:

- Search process: Identifies candidate nodes and edges potentially containing relevant information, and returns a 3-tuple (semantic edges, entity nodes, community nodes)

- Reranker: Reorders the search results

- Constructor: Transforms the relevant nodes and edges into text context
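
The three steps compose into a simple pipeline, sketched below. The sketch shows only the shape of the data flow: `Candidate`, `search`, `rerank`, `construct`, and `retrieve` are hypothetical names, the graph is flattened to a list, and keyword overlap stands in for whatever search and reranking methods a real system would use.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    kind: str   # "edge", "entity", or "community"
    text: str


def _overlap(query: str, text: str) -> int:
    """Number of lowercase words shared by the query and the text."""
    return len(set(query.lower().split()) & set(text.lower().split()))


def search(graph: list[Candidate], query: str):
    """Step 1: return the 3-tuple (semantic edges, entity nodes, community nodes)."""
    hits = [c for c in graph if _overlap(query, c.text) > 0]
    return tuple([c for c in hits if c.kind == kind]
                 for kind in ("edge", "entity", "community"))


def rerank(candidates: list[Candidate], query: str) -> list[Candidate]:
    """Step 2: reorder results, most query-word overlap first."""
    return sorted(candidates, key=lambda c: _overlap(query, c.text), reverse=True)


def construct(edges, entities, communities) -> str:
    """Step 3: turn the selected nodes and edges into a text context."""
    lines = []
    for title, items in (("FACTS", edges), ("ENTITIES", entities),
                         ("COMMUNITIES", communities)):
        lines.append(f"{title}:")
        lines.extend(f"- {c.text}" for c in items)
    return "\n".join(lines)


def retrieve(graph: list[Candidate], query: str) -> str:
    edges, entities, communities = search(graph, query)
    return construct(rerank(edges, query), rerank(entities, query),
                     rerank(communities, query))
```

The string returned by `retrieve` is what would be placed into the LLM's prompt, which is the hand-off from long term memory to working memory.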

The temporal KG can be considered an implementation of long term memory in the CoALA architecture, with the memory retrieval mechanism handling the information exchange between working memory and long term memory. Note that semantic memory in CoALA is the broader concept: it encompasses Zep's semantic entity nodes rather than being equivalent to them.

Final Remarks

Memory plays a crucial role in overcoming the inherent context length limitations of LLMs, enabling them to function effectively as part of intelligent agents. The CoALA framework provides a conceptual understanding of memory in LLM-based agents, distinguishing between working memory and long-term memory.

MemGPT and Zep offer concrete implementations of these memory concepts. MemGPT takes an OS-inspired approach, using a combination of a rolling FIFO queue for working memory and external storage for long-term memory, with a queue manager facilitating information flow. Zep, on the other hand, leverages a temporal knowledge graph to structure and retrieve long-term memory, incorporating temporal reasoning to maintain accurate knowledge over time.

Because memory is a critical capability of LLM-based agents, many memory mechanisms have been introduced recently [4], and more are sure to come. If you are interested in learning more, this GitHub repository collects recent advances in memory mechanisms for LLM-based agents.
