Project page: https://octotools.github.io/

Researchers at Stanford University introduced OctoTools, a training-free, user-friendly, and easily extensible open-source agentic framework designed to tackle complex reasoning across diverse domains.

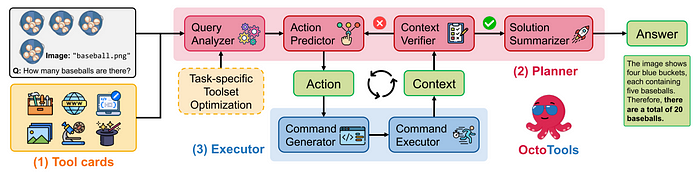

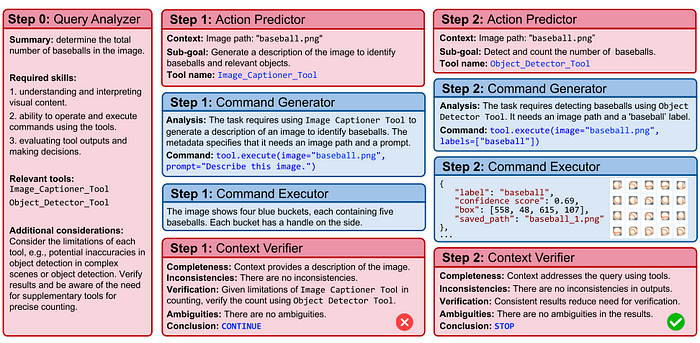

As the figure above shows, the framework has three main building blocks:

- Tool cards

- Planner

- Executor

Key insights

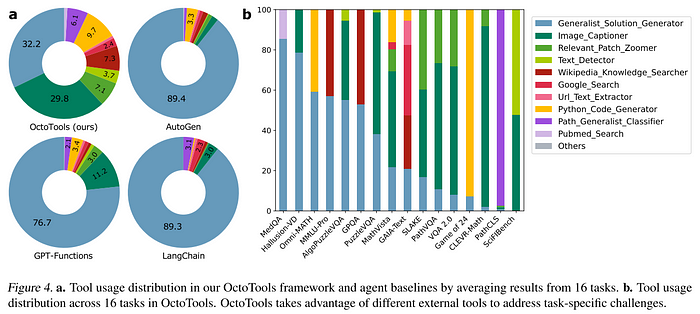

The authors compared OctoTools against several baselines, including alternative agentic frameworks such as GPT-Functions, LangChain, and AutoGen, on 16 diverse benchmarks spanning two modalities, five domains, and four reasoning types.

- OctoTools takes better advantage of the available tools than the alternatives to address task-specific challenges, as illustrated in the tool usage distribution figure below.

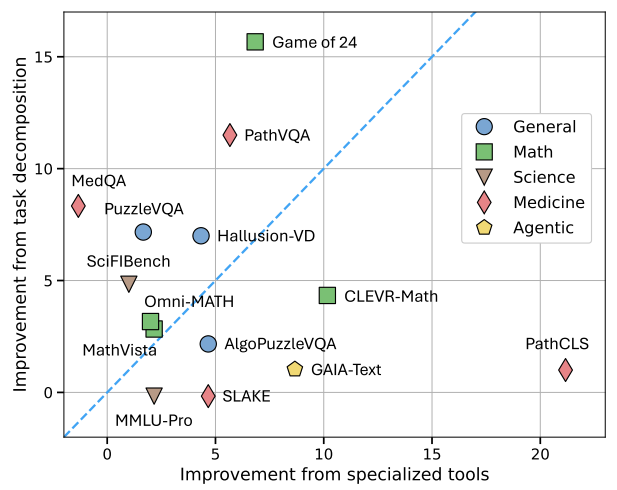

- AI agent frameworks can improve task performance in different ways depending on the skills each task demands: some tasks benefit more from step decomposition (above the blue dotted line), some from specialized tools (below the line), and some from both (along the line). OctoTools is a versatile framework for achieving such improvements across a diverse set of tasks.

In the following, we delve into the details of OctoTools and its key components.

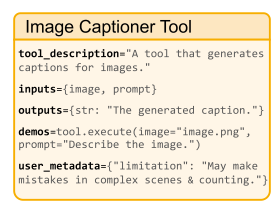

1. Tool cards

Tool cards encapsulate tools in a modular manner, together with associated metadata such as inputs, outputs, and demos, as illustrated below.
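To make the idea concrete, here is a minimal sketch of what a tool card could look like in Python. The `ToolCard` class, its field names, and the `Word_Counter_Tool` example are all hypothetical and chosen for illustration only; they are not the actual OctoTools API.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List


@dataclass
class ToolCard:
    """Hypothetical tool card: a callable plus the metadata a planner can read."""
    name: str
    description: str
    input_types: Dict[str, str]   # argument name -> human-readable type
    output_type: str
    run: Callable[..., Any]       # the wrapped tool itself
    demos: List[str] = field(default_factory=list)  # example invocations


def word_count(text: str) -> int:
    """Toy tool: count whitespace-separated words."""
    return len(text.split())


card = ToolCard(
    name="Word_Counter_Tool",
    description="Counts the words in a piece of text.",
    input_types={"text": "str - the text to count words in"},
    output_type="int - number of words",
    run=word_count,
    demos=['tool.run(text="hello world")'],
)

# The metadata is plain data, so it can be rendered into a prompt,
# while the tool is still callable through the card.
print(card.name, card.input_types, card.run(text="hello world"))
```

Keeping the metadata separate from the callable is what would let a new tool be added or swapped without touching the planner.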

2. Planner

The planner takes the task prompt and a (sub)set of tools as input and derives the corresponding answer. It contains four components:

- Query analyzer provides a high-level task plan for the given task

- Action predictor outputs an action regarding a sub-goal of the plan

- Context verifier checks whether the task is completed. If yes, control passes to the solution summarizer; otherwise, it returns to the action predictor

- Solution summarizer produces the final answer from the problem-solving trajectory once the task is verified as completed.
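The control flow between these four components can be sketched as a simple loop. This is only an illustration under stated assumptions: every function name below is hypothetical, the language-model calls are replaced by deterministic toy functions, the `execute` callable stands in for the executor, and the `max_steps` cap is a safeguard added for this sketch.

```python
from typing import Any, Callable, Dict, List


def run_planner(
    query: Any,
    analyze_query: Callable,
    predict_action: Callable,
    execute: Callable,
    verify_context: Callable,
    summarize_solution: Callable,
    max_steps: int = 10,  # assumption: stop even if the verifier never says "done"
) -> Any:
    plan = analyze_query(query)          # high-level task plan
    context: List[Any] = []              # trajectory of intermediate results
    for _ in range(max_steps):
        action = predict_action(query, plan, context)  # next sub-goal
        context.append(execute(action, context))       # stand-in for the executor
        if verify_context(query, context):             # task completed?
            break
    return summarize_solution(query, context)


# Toy components: the "task" is to add up a list of numbers, one per step.
def analyze_query(query: List[int]) -> str:
    return "add the numbers one at a time"


def predict_action(query: List[int], plan: str, context: List[int]) -> Dict[str, Any]:
    return {"tool": "add", "value": query[len(context)]}


def execute(action: Dict[str, Any], context: List[int]) -> int:
    running_total = context[-1] if context else 0
    return running_total + action["value"]


def verify_context(query: List[int], context: List[int]) -> bool:
    return len(context) == len(query)


def summarize_solution(query: List[int], context: List[int]) -> str:
    return f"total = {context[-1]}"


answer = run_planner(
    [2, 3, 5], analyze_query, predict_action, execute,
    verify_context, summarize_solution,
)
print(answer)
```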

3. Executor

As Figure 1 shows, the action predicted by the action predictor is sent to the executor, which instantiates tool calls by generating executable commands and saves structured results in the context. The context is then fed back to the context verifier.

The executor has two components: a command generator (powered by a language model), which creates a command in the form of an executable Python script, and a command executor, which runs that command.

Note that the authors use a separate language model as the command generator, with the following rationale:

Prior work often expects a single language model both for planning each step (i.e., which tool to use) and for generating the corresponding executable command. This dual responsibility can overload the model and lead to errors, especially when dealing with complex or environment-specific code.
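The split between generating a command and executing it can be sketched as follows. This is a toy illustration, not the OctoTools implementation: `generate_command` is a hypothetical stand-in that formats a string where the real system would call a language model, the action and context layouts are assumptions, and `exec` is used here without any sandboxing.

```python
from typing import Any, Callable, Dict, List


def generate_command(action: Dict[str, Any]) -> str:
    """Stand-in for the LM-powered command generator: emit a Python script."""
    return f"execution = tool(**{action['arguments']!r})"


def execute_command(command: str, tool: Callable[..., Any]) -> Any:
    """Command executor: run the generated script and collect its result."""
    namespace: Dict[str, Any] = {"tool": tool}
    exec(command, namespace)  # assumption: trusted input; a real system should sandbox this
    return namespace["execution"]


def word_count(text: str) -> int:
    """Toy tool: count whitespace-separated words."""
    return len(text.split())


TOOLS: Dict[str, Callable[..., Any]] = {"Word_Counter_Tool": word_count}
context: List[Dict[str, Any]] = []

# An action as the action predictor might emit it (hypothetical layout).
action = {
    "tool_name": "Word_Counter_Tool",
    "arguments": {"text": "tools help agents reason"},
}

command = generate_command(action)
result = execute_command(command, TOOLS[action["tool_name"]])

# Save a structured record so the context verifier can inspect it later.
context.append({"tool_name": action["tool_name"], "command": command, "result": result})
print(context[-1])
```

Because the planner only decides which tool to use and the generator only writes the call, each step stays small, which is the separation the quoted rationale argues for.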

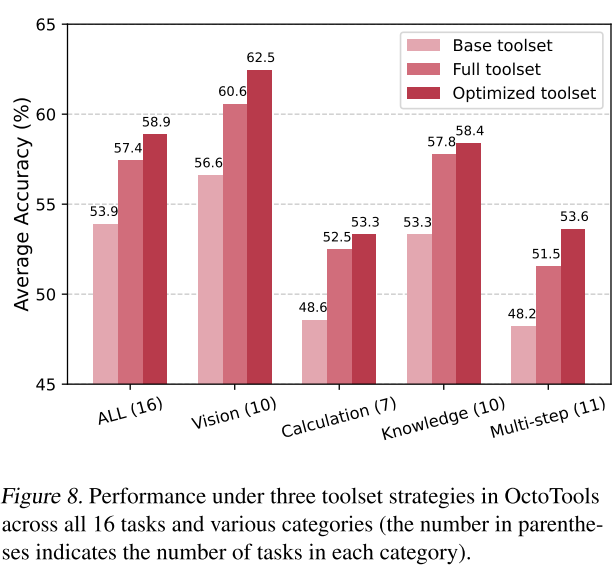

Task-specific toolset optimization

The last component we have not yet discussed is task-specific toolset optimization. Instead of giving the planner the full toolset, a subset may be selected 1) based on expert insights or 2) by optimizing over a small set of examples. Task-specific toolset optimization, a heuristic, applies to the second case, when a small set of validation examples is available.

In a nutshell, it starts with a pre-defined base toolset and iteratively adds new tools based on the performance observed on the validation examples. More details can be found in the paper if you are interested in this aspect specifically.
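One plausible reading of this heuristic is a greedy pass over the candidate tools: try each one on top of the base toolset and keep it only if validation performance improves. The sketch below follows that reading. The function and tool names are hypothetical, the `evaluate` function is a toy stand-in for running the agent on the validation examples, and the exact procedure in the paper may differ.

```python
from typing import Callable, FrozenSet, Iterable, Set


def optimize_toolset(
    base: Iterable[str],
    candidates: Iterable[str],
    evaluate: Callable[[FrozenSet[str]], float],
) -> Set[str]:
    """Greedy selection: keep a candidate tool only if it beats the base toolset."""
    base_set = frozenset(base)
    baseline = evaluate(base_set)        # validation score of the base toolset
    selected = set(base_set)
    for tool in candidates:
        if tool in selected:
            continue
        if evaluate(base_set | {tool}) > baseline:
            selected.add(tool)
    return selected


# Toy evaluation: a made-up per-tool effect on validation accuracy.
TOY_GAINS = {"search": 0.05, "calculator": 0.10, "image_captioner": -0.02}


def evaluate(toolset: FrozenSet[str]) -> float:
    return 0.5 + sum(TOY_GAINS.get(tool, 0.0) for tool in toolset)


optimized = optimize_toolset(
    base=["generalist"],
    candidates=["search", "calculator", "image_captioner"],
    evaluate=evaluate,
)
print(sorted(optimized))
```

In this toy run, the tool that lowers the validation score is left out, which mirrors why an optimized toolset can outperform the full one.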

The figure below shows the benefit of task-specific toolset optimization: using the optimized toolset improves performance over using either the base or the full toolset. The downside is that a validation set has to be prepared before the optimization can be applied.

Hope you enjoyed OctoTools!

See you next time!
